
Why Grading AI Models Is Like Judging a Baseball Game with New Rules Every Inning

SUMMARY

Evaluating AI models is a complex dance – a constant effort to navigate a landscape of rapid innovation, opaque inner workings, and ever-expanding capabilities. While definitively ranking AI models might be a fool's errand, there is still hope! By understanding the challenges, we can approach evaluation with a more critical eye.

AI has reportedly cut developers' time and cost by 20-40%, which is a big win for developers and engineers. Relying on it can sometimes feel like taking an axe to your own foot, but this is where skills evolve: those who learn to get the most out of these tools keep building on the productivity gains seen over the last few years. That brings us to one of the big problems with AI.

The world of AI is a whirlwind of innovation. New AI models boasting superhuman capabilities in writing, coding, and problem-solving emerge seemingly every week. But with such rapid progress comes a crucial question: how do you know which AI model is truly the best fit for your needs? The truth is, evaluating AI models is a surprisingly intricate and multifaceted challenge. Here's why:

They're Everest-Sized Mountains, Not Pebbles on a Beach: Evaluating Large Language Models (LLMs)

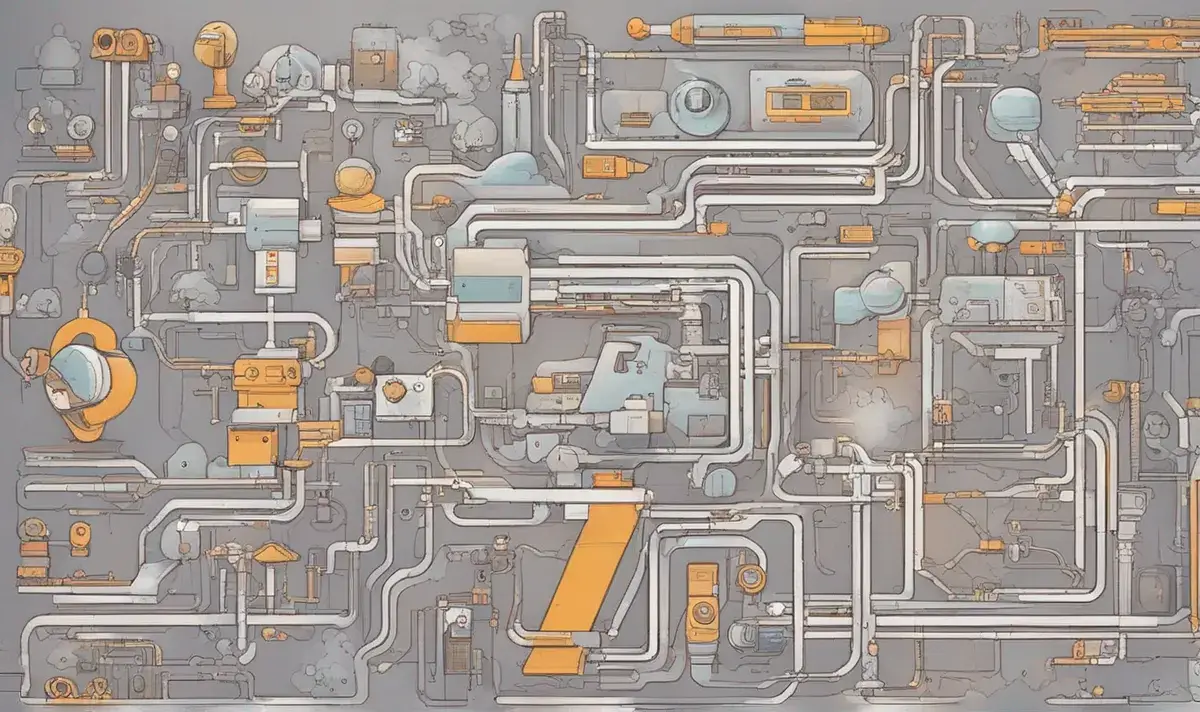

Unlike simple gadgets or software, AI models are more akin to vast, interconnected platforms. A single model isn't just a program, but a complex ecosystem with dozens of sub-models working in concert. Asking your phone for directions doesn't just involve searching its internal memory; it taps into an intricate network of Google services to deliver the answer. This complexity makes any review of an AI model a snapshot in time, because the underlying structure and capabilities are constantly evolving.

Data Graveyards and Secret Recipes: The Opacity Problem in Machine Learning

Companies developing these AI models often treat their inner workings like the crown jewels. We have little to no insight into how they train their models or what data they feed them. It's like judging a chef's culinary skills without ever setting foot in their kitchen! This lack of transparency makes it incredibly difficult to assess potential biases or limitations within the AI models themselves.

The Data Deluge: Big Data and Algorithmic Bias

The sheer volume of data used to train these models is staggering. For instance, OpenAI's GPT-3 model was trained on roughly 300 billion tokens sampled from a filtered text corpus of nearly 500 billion tokens. This vast amount of data makes it nearly impossible to pinpoint the exact origin of any potential biases or quirks within the model's outputs.

The Moving Target of "Capability": AI in Flux

AI models can be used for an astounding array of tasks, many unforeseen by their creators. Imagine playing baseball, but with a new rule introduced every single inning. How do you judge a player's skill when the game itself keeps changing? Similarly, it's difficult to definitively test an AI model's capabilities because it can be pushed and prodded in unexpected directions, performing tasks entirely outside its original design parameters.

Benchmarks: A Double-Edged Sword for AI Performance

Synthetic benchmarks exist to test AI models on specific tasks like answering trivia or identifying errors in writing. While these offer a starting point for evaluation, they come with limitations. Companies can "game the test" by optimizing their models for benchmark performance, and they often publish only favorable results. Benchmarks also don't capture the nuances of real-world use, like how naturally a model generates text or how well it handles open-ended or unexpected questions.
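To make the "gaming the test" point concrete, here is a minimal Python sketch. Everything in it is hypothetical and for illustration only: the two tiny question sets, the `accuracy` helper, and the toy `memorizing_model` that has simply memorized the public benchmark. It is not how any real benchmark or model works, just a way to see how a headline score can diverge from performance on reworded, real-world-style questions.

```python
from typing import Callable, Dict


def accuracy(model: Callable[[str], str], items: Dict[str, str]) -> float:
    """Fraction of questions answered with an exact (case-insensitive) match."""
    correct = sum(
        model(question).strip().lower() == answer.strip().lower()
        for question, answer in items.items()
    )
    return correct / len(items)


# Hypothetical public benchmark: question -> expected answer.
PUBLIC_BENCHMARK = {
    "What is the capital of France?": "Paris",
    "How many legs does a spider have?": "8",
    "What gas do plants absorb?": "Carbon dioxide",
}

# The same facts, reworded, standing in for how real users might ask.
REPHRASED = {
    "Which city is France's capital?": "Paris",
    "A spider has how many legs?": "8",
    "Plants take in which gas?": "Carbon dioxide",
}


def memorizing_model(question: str) -> str:
    """Toy 'model' that has memorized the public benchmark verbatim."""
    return PUBLIC_BENCHMARK.get(question, "I don't know")


benchmark_score = accuracy(memorizing_model, PUBLIC_BENCHMARK)
rephrased_score = accuracy(memorizing_model, REPHRASED)

print(f"public benchmark: {benchmark_score:.0%}")
print(f"rephrased set:    {rephrased_score:.0%}")
```

The toy model scores perfectly on the questions it has seen and fails every reworded one, which is the gap a published benchmark number alone cannot reveal.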

The Elusive "Emergent Qualities": When AI Goes Off Script

AI models can sometimes exhibit surprising behaviors, like generating biased content or producing nonsensical outputs. These "emergent qualities" are difficult to predict or test for beforehand. It's like a baseball player suddenly turning into a ballet dancer mid-game! Evaluating AI models requires not just looking at headline features, but also anticipating these potential quirks and their impact on real-world performance.

Generative AI has acted as a startup booster, serving as an assistant to most solopreneurs, says Sai Krishna, founder of Superblog.

Inshorts:

🎯 Evaluating AI models: Like judging a constantly evolving game (AI evaluation, cutting edge AI)

🎯 Lack of transparency in AI: Makes it hard to assess potential biases (AI bias, responsible AI)

🎯 Big data and AI: The sheer volume of training data can mask hidden issues (big data in AI, AI challenges)

🎯 AI Benchmarking: Can be misleading and not capture real-world use (AI benchmarks, limitations of AI benchmarks)

🎯 Unexpected AI behavior: AI models can exhibit quirks that are hard to predict (unforeseen consequences of AI, AI ethics)
